
Challenge

Roku developers face significant productivity barriers: fragmented documentation, a lack of modern development tools, a steep BrightScript learning curve, and limited intelligent code support. As a result, 30-40% of development time goes to searching for basic information. Econify set out to build a tool that streamlines the developer workflow.

Goals

The project aimed to modernize Roku development by reducing documentation search time by 60%, shortening new developer onboarding from weeks to days, and providing intelligent code assistance comparable to what other platforms offer. Strategic objectives included improving overall development velocity by 40-55%, reducing interruptions to senior developers, and creating a foundation for scalable Roku development practices.

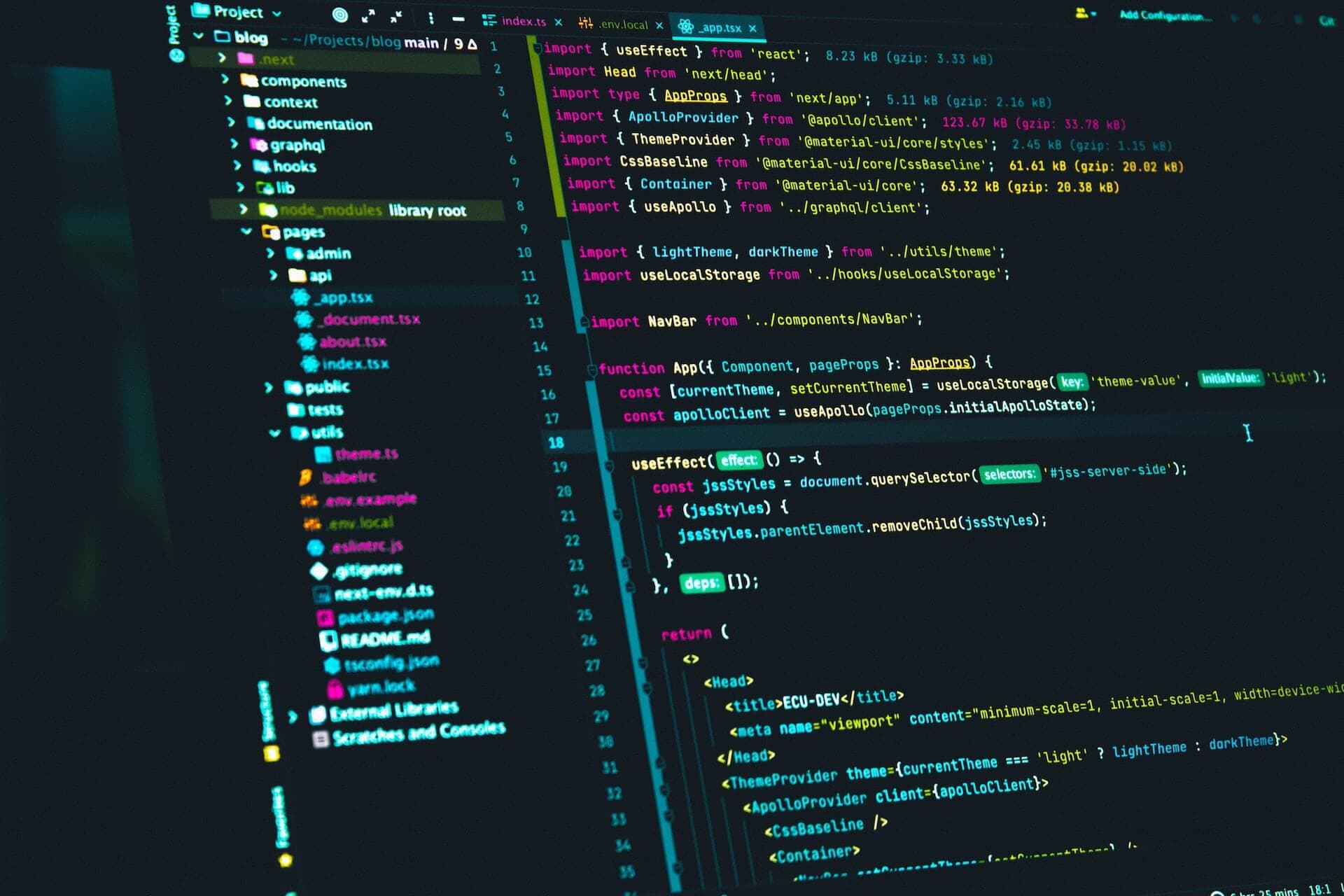

Econify developed an AI-powered developer assistant that seamlessly integrates into existing workflows through a custom-trained LLM specialized in Roku documentation and BrightScript syntax.

Solution

The solution features a VSCode plugin delivering intelligent autocomplete for BrightScript and SceneGraph, paired with natural language Q&A capabilities that provide instant documentation answers. Designed for zero-training deployment, the tool delivers immediate productivity benefits without requiring team onboarding or workflow changes.

- Custom-trained LLM specialized in Roku documentation and BrightScript

- VSCode plugin with intelligent autocomplete for BrightScript/SceneGraph

- Natural language Q&A for instant documentation answers

- Zero-training deployment with immediate productivity benefits

Business Value

The tool transforms the development experience and delivers immediate ROI through time savings (up to 15-20 hours per developer per month), accelerated onboarding, and fewer coding errors, while establishing a foundation for modern, scalable Roku development practices.

- 1.3 million lines of BrightScript culled from GitHub for training

- 17.7 million tokens in training data

- 10 GB size of quantized GGUF model
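For a rough sense of scale, the two training-data figures above imply an average token density per line of BrightScript. The short Python sketch below only divides the quoted numbers; the constant and function names are illustrative, and the result is a back-of-the-envelope estimate rather than a figure reported by Econify.

```python
# Figures quoted in the case study (rounded as published).
TRAINING_LINES = 1_300_000     # lines of BrightScript
TRAINING_TOKENS = 17_700_000   # tokens in the training data


def tokens_per_line(tokens: int, lines: int) -> float:
    """Return the average number of tokens per line of source code."""
    if lines <= 0:
        raise ValueError("lines must be a positive integer")
    return tokens / lines


average = tokens_per_line(TRAINING_TOKENS, TRAINING_LINES)
print(f"Average tokens per line: {average:.1f}")
```

Because both inputs are rounded, the result is best read as an order-of-magnitude check: somewhere in the low teens of tokens per line.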