
Challenge

As Hearst Newspapers (HNP) explored the growing demand for AI-powered tools—like content summarization, semantic search, and customer-facing chat experiences—they needed a scalable, maintainable foundation to unify how internal teams interacted with Large Language Models (LLMs) and vector databases.

Without such a foundation, AI experimentation could quickly become fragmented, expensive, and hard to govern.

Goals

Econify was engaged by the HNP engineering department to create a proof-of-concept internal AI platform gateway that:

- Encompassed a set of managed services that would standardize access to LLMs and vector search

- Enabled internal teams to build AI-powered applications with minimal friction

- Provided centralized observability, cost tracking, and future extensibility

Solution

We built a modular AI “gateway” inside Hearst’s cloud environment. Instead of every team integrating directly with different AI vendors, the gateway offers a small set of easy-to-use services:

- Generate: produce summaries, drafts, and responses from an LLM

- Ingest: add content to a secure internal index for later retrieval

- Retrieve: run smart, meaning-aware search across that content

Everything is accessed through clear, documented endpoints, so developers can plug in quickly. Behind the scenes, the platform is designed to be reliable and efficient, with smart caching to reduce spend, the flexibility to swap model providers in the future, and centralized monitoring so teams can see what’s working and what it costs.
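
To make the shape of the three services concrete, here is a minimal Python sketch of how such a gateway could be organized. It is an illustration under stated assumptions, not the platform built for Hearst: the names `Gateway`, `ModelProvider`, and `EchoProvider` are hypothetical, the cache is a plain in-memory dictionary, and the "embedding" is a toy bag-of-words vector standing in for a real embedding model and vector database.

```python
import hashlib
import math
from collections import Counter
from typing import Protocol


class ModelProvider(Protocol):
    """Hypothetical interface that any LLM vendor adapter would satisfy."""

    def complete(self, prompt: str) -> str: ...


class EchoProvider:
    """Stand-in provider for this sketch; a real adapter would call a vendor API."""

    def __init__(self) -> None:
        self.calls = 0  # counts upstream calls so cache hits are observable

    def complete(self, prompt: str) -> str:
        self.calls += 1
        return f"[draft] {prompt[:40]}"


def embed(text: str) -> Counter:
    """Toy bag-of-words vector; an assumption in place of a learned embedding."""
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse term-count vectors."""
    dot = sum(a[t] * b[t] for t in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


class Gateway:
    """Single entry point exposing generate, ingest, and retrieve."""

    def __init__(self, provider: ModelProvider) -> None:
        self.provider = provider  # swappable without changing callers
        self.cache: dict[str, str] = {}
        self.index: list[tuple[str, Counter]] = []

    def generate(self, prompt: str) -> str:
        # Identical prompts are served from the cache to avoid repeat spend.
        key = hashlib.sha256(prompt.encode()).hexdigest()
        if key not in self.cache:
            self.cache[key] = self.provider.complete(prompt)
        return self.cache[key]

    def ingest(self, doc_id: str, text: str) -> None:
        # Store the document's vector for later similarity search.
        self.index.append((doc_id, embed(text)))

    def retrieve(self, query: str, k: int = 3) -> list[str]:
        # Rank stored documents by similarity to the query; return top-k ids.
        q = embed(query)
        ranked = sorted(self.index, key=lambda item: cosine(q, item[1]), reverse=True)
        return [doc_id for doc_id, _ in ranked[:k]]
```

Because callers depend only on `Gateway`, swapping `EchoProvider` for another adapter that implements `complete` would not change application code, which is the kind of provider flexibility described above. In a real deployment each method would presumably sit behind an HTTP endpoint and report usage to the monitoring layer.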

Outcomes

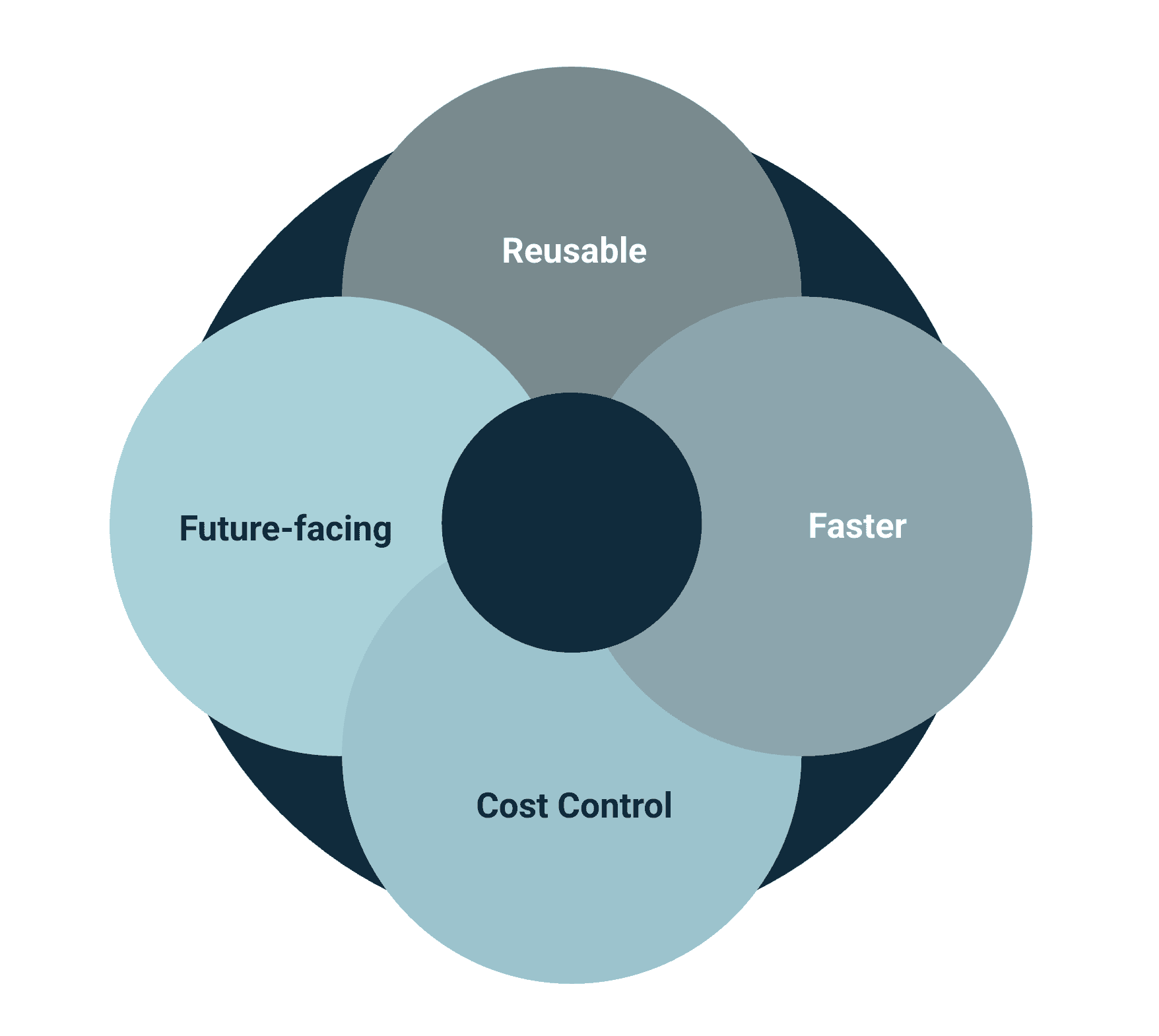

- Reusable AI layer: Multiple products can tap the same foundation—no duplicate effort.

- Faster delivery: Teams ship AI features more quickly with a consistent path from idea to prototype.

- Cost control: Usage and spend are visible, and caching keeps budgets in check.

- Future-proofing: It’s easy to change or add LLM providers as needs evolve.

Business Value

The AI gateway lets Hearst’s teams build AI-enabled products quickly without having to manage the underlying infrastructure themselves.